BY STEVEN SHAVIRO

In the past few decades, something has happened to the way that we engage with sounds and images. There has been a change in audiovisual media. Electronic technologies have replaced mechanical ones, and analog forms of coding, storage, and transmission have given way to digital ones. These developments are correlated with new ways of seeing and hearing, and of combining seeing and hearing. We have contracted new habits, and entertained new expectations. A new audiovisual aesthetic is now emerging. In what follows, I attempt to describe this new aesthetic, to speculate about its possible causes, and to work through its potential implications.

1. The Audiovisual Contract

During the middle third of the twentieth century, sound cinema established what Michel Chion calls “the audiovisual contract”: a basic paradigm for the relationship between sounds and moving images. In both classical and modern cinema, sound brings “added value” to the image: “a sound enriches a given image” in such a way that it seems to us as if the added “information or expression” were “already contained in the image itself” (5). That is to say, cinema sound is supplemental, precisely in Jacques Derrida’s sense of this word: “an addition [that] comes to make up for a deficiency . . . to compensate for a primordial non-self-presence” (lxxi). We rarely pay attention to film sound in and of itself; we always regard it as secondary to the images of the film. And yet it turns out, again and again, that sound endows those images with a potency, a meaning, and a seeming self-sufficiency, that they never could have established on their own. “Added value,” Chion says, “is what gives the (eminently incorrect) impression that sound is unnecessary, that sound merely duplicates a meaning which in reality it brings about” (5).

It can be plausibly argued that this was already the case, through a sort of anticipation, even in the era of silent film. As Mary Ann Doane suggests, silent film was understood even in its own time “as incomplete, as lacking speech” (33). The missing voice plays a crucial role in silent film; for, denied any direct expression, it “reemerges in gestures and the contortions of the face—it is spread over the body of the actor” (Doane 33). In this way, speech already plays a supplemental role in cinema from the very beginning; by its very absence, it underwrites the seeming autonomy of moving visual images. In addition, most silent films were shown with live musical accompaniment. Chion discusses at length the way that soundtrack music and ambient noises temporalize the sound film, giving it a sense of forward movement, and of duration (13-21). But musical accompaniment already performs this service for silent film. (Indeed, most “silent” films are excruciatingly difficult to watch in actual silence.) We can conclude from all this that the audiovisual contract was already in effect, to a large extent, even in the silent era. When the talkies finally arrived, sound had a place marked out for it. It was already doomed to be supplemental. It immediately functioned—as Deleuze puts it, citing and extending Chion—not as an independent sensorial source, but rather “as a new dimension of the visual image, a new component” (Cinema 2 226).

Mainstream cinema since the talkies has generally synchronized sound to image—as so many film theorists have noted and lamented. Despite the fact that sounds and images are recorded on separate devices, and that many sounds are added in postproduction, the dominant tendency has always been to create the illusion that the image track and the soundtrack coincide naturally. “The voice must be anchored by a given body,” and “the body must be anchored in a given space” (Doane 36). Even non-diegetic soundtrack music is naturalized; for the role of the soundtrack’s “unheard melodies” is to blend seamlessly into the visual action, and thereby subliminally instruct us in how to understand and feel the images (Gorbman). This demand for naturalism is the basis for sound’s traditionally supplemental role in the movies.

Of course, every dominant practice inspires a counter-practice. The illusionistic synchronization of sound to image has long been opposed by radical filmmakers and film theorists. Already in 1928, Eisenstein denounced the “adhesion” of sound to image in Hollywood film, and demanded instead “a contrapuntal use of sound . . . directed along the line of its distinct non-synchronization with the visual images” (258). Eisenstein was never able to put his ideas about sound montage into practice; but, starting in the 1960s, directors like Jean-Luc Godard, Marguerite Duras, and Jean-Marie Straub and Danièle Huillet experimented with separating sound from image, and giving sound its own autonomy as a source of perceptions and of information. They demonstrated the arbitrariness of synchronization, and explored the possibilities of setting sounds and images free from one another, or even directly against one another. As Deleuze puts it, in the films of these directors “talking and sound cease to be components of the visual image; the visual and the sound become two autonomous components of an audio-visual image, or better, two heautonomous images” (Deleuze, Cinema 2 259).[1]

I do not wish to minimize the importance of these dialectical explorations. But we should not exaggerate their novelty either. Fundamentally, the films of Godard, Duras, and Straub/Huillet still belong to the traditional cinematic regime in which images are primary, and sound only provides a supplemental added value. Modernist films may well call attention to the arbitrariness of sound-image relations, instead of dissimulating this arbitrariness. But these films do not actually alter the terms of the underlying audiovisual contract. In positing sound as an independent “image,” and making the role of sound (as it were) “visible,” they point up a certain way that cinema functions—but they do not thereby actually change this mode of functioning.

This is part of the general malaise of modernism. Twentieth-century aesthetics grossly overestimated the efficacy, and the importance, of alienation-effects, self-reflexive deconstructions, and other such demystifying gestures. Aesthetically speaking, there is nothing wrong with these gestures; they are often quite beautiful and powerful. I am second to no one in my admiration for Two or Three Things I Know About Her and India Song. But we should not deceive ourselves into thinking that these films somehow escape the paradigms whose mechanisms they disclose and reflect upon. They still largely adhere to the audiovisual contract—as Chion explicitly notes in the case of India Song, where “the sounds of the film congregate around the image they do not inhabit, like flies on a window pane” (158). The audiovisual contract allows both for the seamless combination of sounds and images, and for their more or less violent disjunction. Sound can add value to a visual presentation, Chion says, “either all on its own or by discrepancies between it and the image” (5). The sound fulfills its supplemental function either way, energizing the images while remaining secondary to them.

2. From Film to Video

In recent years, however, post-cinematic media have altered the terms of the familiar audiovisual contract.[2] Today, audiovisual forms no longer operate in the same ways that they used to. “In the cinema,” Chion says, “everything passes through an image”; but television and video work instead by “short-circuiting the visual” (158). The technological transformations from mechanical to electronic modes of reproduction, and from analog to digital media, have accomplished what avant-garde cinematic practices could not: they have altered the balance between images and sounds, and instituted a new economy of the senses. The new media forms have affected what Marshall McLuhan (taking the term from William Blake) calls the “ratio of the senses.” For McLuhan, new media always “alter sense ratios or patterns of perception” (McLuhan 18). Indeed, “any invention or technology is an extension or self-amputation of our physical bodies, and such extension also demands new ratios or new equilibriums among the other organs and extensions of the body” (45). When media change, our sensorial experiences also change. Even our bodies are altered—extended or “amputated”—as we activate new potentialities, and let older ones atrophy.

Specifically, McLuhan claims that, as mechanical and industrial technologies give way to electronic ones, we move away from a world defined by “segmentation and fragmentation,” and into “a brand new world of allatonceness” (McLuhan 176; McLuhan and Fiore 63). Mechanical technologies, from Gutenberg’s printing press to Ford’s assembly line, broke down all processes into their smallest components, and arranged these components in a strict linear and sequential order. But electronic technologies invert this tendency, creating patterns and fields in which processes and their elements happen all together.

This transition also entails a reordering of the senses. When we leave mechanical technologies behind, we move away from a world that gives itself to the eye, and that is organized around the laws of Renaissance perspective (McLuhan and Fiore 53). We move instead into a world that no longer privileges sight: “an acoustic, horizonless, boundless, olfactory space” in which “purely visual means of apprehending the world are no longer possible” (McLuhan and Fiore 57; 63). Of course, this doesn’t mean that we will stop reading words and looking at images.[3] But however much time we spend today looking at multiple screens, we can no longer privilege the model of a disembodied eye, detached from, and exerting mastery over, all it sees. Electronic media foster “audile-tactile perception,” an interactive and intermodal form of sensibility, no longer centered upon the eye (McLuhan 45). This is one reason why video is significantly different from film, even when we watch movies on our video devices. Walter Benjamin famously wrote that “it is another nature which speaks to the camera as compared to the eye. . . . It is through the camera that we first discover the optical unconscious” (266). But when cinematic mechanical reproduction is displaced by video-based electronic reproduction, such ocularcentrism is no longer valid. The sound recorder becomes as important as the camera. We discover, not an optical unconscious, but a thoroughly audiovisual one.

Of course, this change is not thoroughgoing or total. For one thing, the transformation of media forms is still in process. For another, new media and new sensorial habits do not usually obliterate older ones, but tend instead to be layered on top of them. For instance, very few people use typewriters any longer; but computer keyboards continue to be modeled after typewriter keyboards. Similarly, lots of people still go to the movies; and lots of newer video and digital moving-image works continue to be modeled after the movies. Traditional movies continue to be made, even as they increasingly rely upon post-cinematic (electronic and digital) technologies for production, distribution, and exhibition. In both contemporary Hollywood films and contemporary art films, sound still often functions as it used to, providing added value for the moving images.

Nevertheless, electronic media work quite differently than the movies did. Video and television tend to bring sound into greater prominence. Chion goes so far as to suggest that television is basically “illustrated radio,” in which “sound, mainly the sound of speech, is always foremost. Never offscreen, sound is always there, in its place, and does not need the image to be identified” (157). In these electronic media, the soundtrack takes the initiative, and establishes meaning and continuity. The televisual image, on the other hand, “is nothing more than an extra image,” providing added value, and supplementing the sound (158). Images now provide an uncanny surplus, subliminally guiding the ways that we interpret a foregrounded soundtrack. In the passage from cinema to television and video, therefore, audiovisual relations are completely inverted.

Chion also argues that electronic scanning, the technological basis of television and video, changes the nature of visual images themselves. Where cinema “rarely engages with changing speeds and stop-action,” video does this frequently and easily. “Film may have movement in the image”; but “the video image, born from scanning, is pure movement” (162). The intrinsic “lightness” of video equipment replaces the “heaviness” of the cinematic apparatus (163). All this leads to yet more paradoxical inversions. For “the rapidity and lability of the video image” work to “bring [it] closer to the eminently rapid element that is text” (163). There is a certain “visual fluttering” in video, a speeding up that makes it into “visible stuff to listen to, to decode, like an utterance” (163). This means that “everything involving sound in film—the smallest vibrations, fluidity, perpetual mobility—is already located in the video image” as well (163). In other words, where classical cinema subordinated sound to image, and modernist cinema made sound into a new sort of image, in television and video visual images tend rather to approach the condition of sound.

3. From Analog to Digital

The ratio of the senses—the balance between eye and ear, or between images and sounds—has also been altered by the massive shift, over the course of the last two decades, from analog to digital media. Digitization undermines the traditional hierarchy of the senses, in which sight is ranked above hearing. On a basic ontological level, digital video consists in multiple inputs, all of which, regardless of source, are translated into, and stored in the form of, the same binary code. This means that there is no fundamental difference, on the level of raw data, between transcoded visual images and transcoded sounds. Digital processing treats them both in the same way. Digitized sound sources and digitized image sources now constitute a plurality without intrinsic hierarchy. They can be altered, articulated, and combined in numerous ways. The mixing or compositing of multiple images and sounds allows for new kinds of juxtaposition and rhythmic organization: effects that were impossible in pre-digital film and television. These combinations may even work on the human sensorium in novel ways, arousing synesthetic and intermodal sensory experiences. Digital technologies thus appeal to—and also arouse, manipulate, and exploit—the fundamental plasticity of our brains (Malabou). They can do this because, as McLuhan says, they do not just exteriorize one or another human faculty, but constitute “an extension,” beyond ourselves, “of the central nervous system” in its entirety (McLuhan and Fiore 40).

Digitization reduces sounds and images alike to the status of data or information. Images and sounds are captured and sampled, torn out of their original contexts, and rendered in the form of discrete, atomistic components. Additional components, with no analog sources at all, may also be synthesized at will. All these components, encoded as bits of information, can then be processed and recombined in new and unexpected ways, and then re-presented to our senses. In their digital form, no source or component can be privileged over any other. Digital data conform to what Manuel DeLanda calls a flat ontology: one that is without hierarchy, “made exclusively of unique, singular individuals, differing in spatio-temporal scale but not in ontological status” (47). Digital information is organized according to what Lev Manovich calls a database logic: “new media objects do not tell stories; they do not have a beginning or end; in fact, they do not have any development, thematically, formally, or otherwise that would organize their elements into a sequence. Instead, they are collections of individual items, with every item possessing the same significance as any other” (218).

Strictly speaking, Manovich’s point is not that narrative ceases to exist in digital media, but that its role is secondary and derivative. All the elements deployed in the course of a narrative must first be present simultaneously in the database. The database therefore pre-defines a field of possibilities within which all conceivable narrative elements are already contained. And this is why, “regardless of whether new media objects present themselves as linear narratives, interactive narratives, databases, or something else, underneath, on the level of material organization, they are all databases” (228). The temporal unfolding of narrative is subordinated to the permutation and recombination of elements in a synchronic structure.

This structural logic of the database has several crucial consequences. For one thing, digital sampling and coding takes precedence over sensuous presence. Not only do all sounds and images have equal status; they are also all subordinated to the informational structure in which they are stored. Images and sounds are stripped of their sensuous particularity, and abstracted into a list of quantitative parameters for each pixel or slice of sound. These parameters do not “represent” the sounds and images to which they refer, so much as they are instructions, or recipes, for reproducing them. As a result, sounds and images are not fixed once and for all, but can be made subject to an indefinite process of tweaking and modulation. In addition, sounds and images can be retrieved at will, in any order or combination. Even in the case of older media forms like classic films, digital technologies allow us to speed them up or slow them down, to jump discontinuously from one point in their temporal flow to another, or even—as Laura Mulvey has recently emphasized—to halt them entirely, in order to linger over individual movie frames. Databases allow in this way for random access, because their underlying order is simultaneous and spatial. In digital media, time becomes malleable and manageable; Bergson would say that time has been spatialized.

4. Out of Time and Into Space

The movement from narrative organization to database logic is just one aspect of a much broader cultural shift. Along with the transitions from cinema to video, and from analog technologies to digital ones, we have moved (in William Burroughs’s phrase) “out of time and into space” (158). The modernist ethos of duration and long-term, historical memory has given way to an ethos of short-term memory and “real-time” instantaneity. This shift has been widely noted by social and cultural theorists. Already in the 1970s, Daniel Bell argued that “the organization of space . . . has become the primary aesthetic problem of mid-twentieth-century culture, as the problem of time . . . was the primary aesthetic concern of the first decades of this century” (107). Fredric Jameson’s early-1980s reading of “the cultural logic of late capitalism” sees our culture as being “increasingly dominated by space and spatial logic”; as a result, “genuine historicity” becomes unthinkable, and we must turn instead to a project of “cognitive mapping” in order to grasp “the bewildering new world space of late or multinational capital” (Jameson 25; 19; 52; 6). More recently, in his survey of turn-of-the-century globalization, Manuel Castells has argued that “space organizes time in the network society” (407).

Any audiovisual aesthetics must come to terms with this new social logic of spatialization. How do relations between sound and image change when we move out of time and into space? In the first place, it is evident that images are predominantly spatial, whereas sounds are irreducibly temporal. You can freeze the flow of moving images in order to extract a still, but you cannot make a “still” of a sound. For even the smallest slice of sound implies a certain temporal thickness. Chion says that “the ear . . . listens in brief slices, and what it perceives or remembers already consists in short syntheses of two or three seconds of the sound as it evolves” (12). These syntheses correspond to what William James famously called the specious present:

The practically cognized present is no knife-edge, but a saddle-back, with a certain breadth of its own on which we sit perched, and from which we look in two directions into time. The unit of composition of our perception of time is a duration. . . . We seem to feel the interval of time as a whole, with its two ends embedded in it. (574)

Such a duration-block, “varying in length from a few seconds to probably not more than a minute,” constitutes our “original intuition of time” (James 603).

Chion suggests, along these lines, “that everything spatial in a film, in terms of image as well as sound, is ultimately encoded into a so-called visual impression, and everything which is temporal, including elements reaching us via the eye, registers as an auditory impression” (136).[4] Sound has the power to temporalize an otherwise static flow of cinematic images, precisely because “sound by its very nature necessarily implies a displacement or agitation, however minimal” (9-10). The logic of spatialization would therefore seem to imply a media regime in which images were dominant over sounds.

However, the fact that hearing is organized into “brief slices,” or discrete blocks of duration, means that, according to Chion, hearing is in fact atomized, rather than continuous (12). William James similarly writes of the “discrete flow” of our perception of time, or the “discontinuous succession” of our perceptions of the specious present (James 585; 599). Many distinct sounds may overlap in each thick slice of time. In contrast, images cannot be added together, or thickened, in this way. We can easily hear multiple sounds layered on top of one another, while images superimposed upon one another are blurred to the point of illegibility. In addition, cinematic images imply a certain linearity, and hence succession, because they are always localized in terms of place and distance. You have to look in a certain direction to see a particular image. As Chion puts it, cinema “has just one place for images,” which are always confined within the frame (67). Sound, however, frees us from this confinement; “for sound there is neither frame nor preexisting container” (67). Although sound can have a source, it doesn’t have a location. It may come from a particular place, but it entirely fills the space in which it is heard (69).

By entirely filling space, sound subverts the linear, sequential order of visual narrative, and lends itself to the multiplicity of the spatialized database aesthetic. McLuhan always associates the predominance of sound with simultaneity, allover patterns, and “information” as the “technological extension of consciousness” (McLuhan 57). In acoustic space, McLuhan says, “Being is multidimensional and environmental and admits of no point of view” (McLuhan and McLuhan 59). Chion similarly notes that sound promotes the effects of simultaneity and multiplicity in post-cinematic media.[5] In music video, for instance, “the . . . image is fully liberated from the linearity normally imposed by sound” (167). This means that the music video’s “fast montage,” or “rapid succession of single images,” comes to function in a way that “closely resembles the polyphonic simultaneity of sound or music” (167). Precisely because the music video’s soundtrack is already given in advance, we are offered “a joyous rhetoric of images” that “liberates the eye” (166). In cinema, sound temporalizes the image; but in post-cinematic, electronic, and increasingly digital forms like music video, the sound works to release images from the demands of linear, narrative temporality.

5. The Death of Cinema

The movement out of time and into space has crucial ramifications for cinema as a time-bound art. In his recent, beautifully elegiac book The Virtual Life of Film, David Rodowick mourns what he sees as the death of cinema at the hands of electronic and digital technologies. Rodowick argues that cinematic experience is grounded in the closely related “automatisms” of (Bazinian) indexicality and (Bergsonian) duration (41). In both classical and modernist film, every cinematic space “expresses a causal and counterfactually dependent relation with the past as a unique and nonrepeatable duration” (67). That is to say, the space of the film is indexically grounded in a particular span of time past, which it preserves and revivifies. Analog film “always returns us to a past world, a world of matter and existence”; and it thereby allows us to feel “an experience of time in duration” (121). Moreover, cinematic space is actively assembled through the time-dependent processes of camera movement and montage. For both these reasons, cinema presents to us the pastness, and the endurance in time, of actual things.

But according to Rodowick, digital media no longer do this. Where analog photography and cinematography preserved the traces of a preexisting, profilmic reality, digital media efface these traces, by translating them into an arbitrary code.[6] Without the warrant of analog cinema’s indexical grounding, Rodowick says, digital moving-image media are unable to express duration.[7] They are only able to transmit “the expression of change in the present as opposed to the present witnessing of past durations” (136). Indeed, in digital works not only is time undone, but even “space no longer has continuity and duration,” since “any definable parameter of the image can be altered with respect to value and position” (169). In sum, for Rodowick, “nothing moves, nothing endures in a digitally composed world. The impression of movement is really just an impression . . . the sense of time as la durée gives way to simple duration or to the ‘real time’ of a continuous present” (171).

I do not think that Rodowick is wrong to suggest that time plays a different role in electronic and digital media than it did in the cinema. I take it as symptomatic, however, that Rodowick only discusses cinema as a visual medium; his book has almost nothing to say about sound. This is a problem; for even in the indexical, realist cinema valued by Bazin, Cavell, and Rodowick, sounds work quite differently than images do. Images may be understood as indexical traces, or as perceptual evidence of a former presence,[8] but sounds cannot be conceived in this way (Rodowick Virtual 116). This is because of sound’s inability to be contained. Even the simplest and clearest sounds resonate far beyond the bodies or objects that have produced them, and thus can easily be separated from their origins. Also, as Chion reminds us, even the most direct or naturalistic cinematic sound is rendered rather than reproduced (109-17). For these reasons, cinematic sounds can never be indexical traces, and warrants of profilmic reality, in the way that analog cinematic images are.

Chion notes that even classical sound films are filled with “invisible voices,” or with what he calls the acousmêtre: a sound source that is “neither inside nor outside the image,” neither onscreen nor off, but rather haunts the image without being manifested within it (127; 129). Even when sound serves only as “added value,” its phantasmatic effects complicate Rodowick’s sense of “an image expressive of a unique duration that perseveres in time” (Virtual 117). With sound’s increasing prominence in electronic and digital media, the question of audiovisual temporality becomes even more convoluted and complicated. Post-cinematic media may not express Bergsonian or Proustian duration, just as they do not lay claim to indexical realism; but their “spatialized” temporalities may well be more diverse and fertile than Rodowick is willing to allow for.

6. Splitting the Atom

Keeping all these considerations in mind, I would now like to examine audiovisual and space-time relations in one particular recent media object: Edouard Salier’s music video for Massive Attack’s song “Splitting the Atom,” from their 2010 album Heligoland. “Splitting the Atom” is a trancey and mournful song, with a strong reggae-inflected beat that is just a bit too slow to dance to. The sparse, and mostly synthesized, instrumentation is dominated by an organ-like keyboard sound, whose repetitive minor-key chords reinforce the clap-like beat of the percussion. A second, more dissonant synthesizer line plays in the upper registers. The skeletal melody is carried by male vocals that scarcely go above a whisper. Massive Attack co-leader Daddy G sings the first two verses in his extraordinary deep bass voice; Horace Andy’s quivering baritone sings the later verses. The song’s lyrics are atmospheric, opaque, and generally bleak: “The summer’s gone before you know / The muffled drums of relentless flow / You’re looking at stars that give you vertigo / The sun’s still burning and dust will blow. . . .”

Overall, “Splitting the Atom” is a contemplative, melancholy work. Its steady pulse implies stasis, despite the steadily increasing chaos of the dissonant upper synthesizer register. The song refuses both the dynamic churn of polyrhythmic dance music, and the forward movement of anything that has a narrative. The sound just drifts; it never reaches a climax, and it never really gets anywhere. Indeed, one reviewer complained that “the song doesn’t move toward anything; it just plods quietly along for five minutes” (Breihan). I would argue that, while this is descriptively correct, it is not a bug, but a feature. “Splitting the Atom” is profoundly autumnal. It stands on the verge of incipient change, but without actually yielding to it. It seems to be poised at the moment of impending death, barely holding on in the face of oblivion. “It’s easy, / Don’t let it go,” the singers exhort us in the chorus; “Don’t lose it.” But despite this suggestion of resistance, the overall sound of the song seems already resigned to loss. “Splitting the Atom” is dedicated to endurance in the face of pain, or simply to maintaining oneself in place—as if this were the best that we could hope for.

Salier’s video does not attempt, in any direct way, to illustrate the song’s lyrics, or even to track its musical flow. But in its own way, it responds to the song’s dampened affect, its bleak vision, and its sense of stasis before catastrophe. The video is entirely computer-generated, and almost entirely in grayscale. It implies a narrative, without explicitly presenting one. And although the video simulates camera movement, the space through which the virtual camera seems to move is itself frozen in time, motionless. Something terrible has just happened, or is on the verge of happening; but we cannot tell exactly what it is. The director’s own description of the video is cryptic in the extreme:

The fixed moment of the catastrophe. The instant the atom bursts on the beast, the world freezes into a vitrified chaos. And we go through the slick and glistening disaster of a humanity in distress. Man or beast? The responsibility of this chaos is still to be determined. (van Zon)

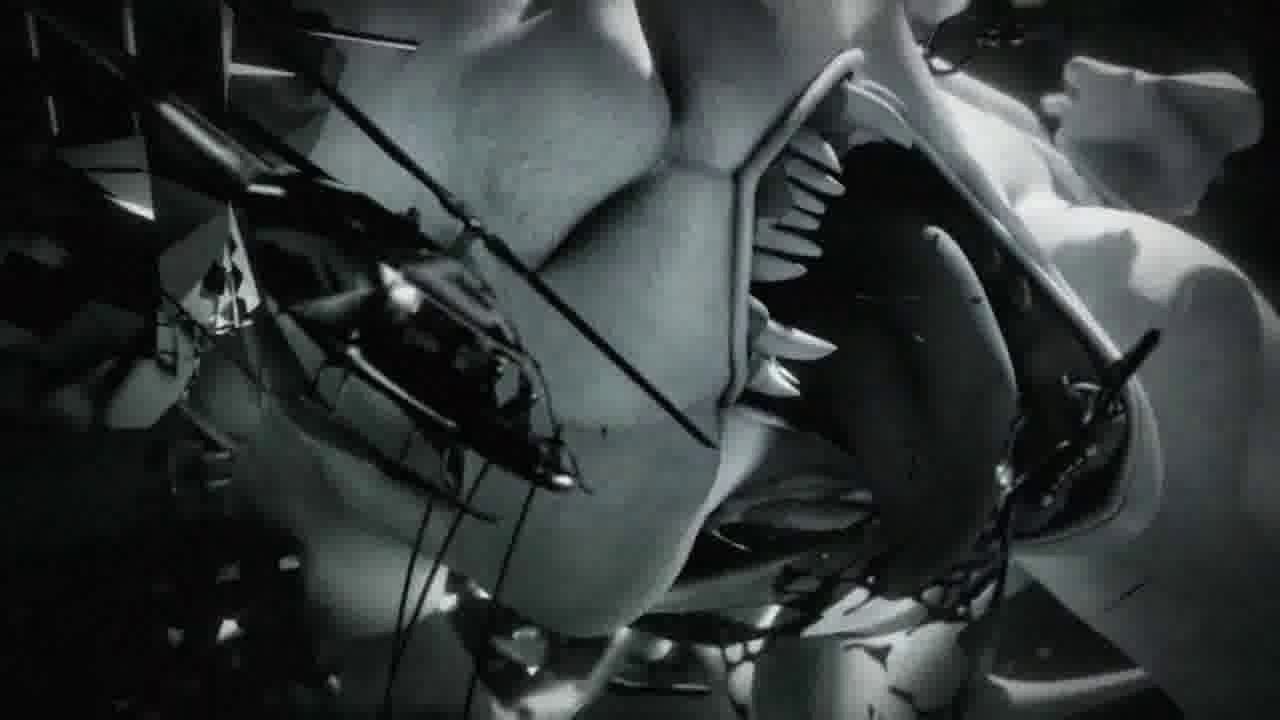

The video consists of a long single take: a slow virtual crane shot, passing over a bleak and dense landscape. The (simulated) camera moves freely in three dimensions. Sometimes it tracks forward; at other times it pivots sideways, or spirals around slowly. At first, we pass over fracture lines running across a smooth surface, in which vague, blurry shapes are reflected. Then the camera rises, and swoops over a series of abstract geometric forms: multifaceted mineral crystals, or perhaps the polygons that are basic to 3D modeling. But shortly, the camera moves into an urban scene; the polyhedral crystals now congeal into the forms of buildings. We see heavy traffic on skyscraper-lined streets. Human figures are posed in upper-floor apartment windows, having sex or watching the traffic below. The camera then moves through a series of plazas and open squares. Here there are more human figures milling about; often, their forms are not completely rendered, but appear as masses of polyhedrons. There are also robots firing what seem to be huge laser guns.

As the song continues, the urban space through which the camera passes becomes increasingly dense; it is jammed with closely packed tall buildings, and crisscrossed by overpasses. There is also a lot of wreckage, suspended motionless in mid-air: falling bodies and vehicles, and shards of debris. Nothing moves except for the camera itself, as it swings and swirls around the wreckage. Stabs of light occasionally penetrate the murk. Eventually the camera approaches what seems to be an enormous organic form. The camera circles and pans around this form, and then moves back away from it. From a distance, the form looks vaguely cat-like, with a rounded body, uncertain limbs, and whiskers jutting out just above an open mouth filled with gigantic teeth. It is apparently dead, and surrounded by devastation. Is this the “beast” of which the director speaks? Perhaps the monster has attacked the city, though we do not know for sure. In any case, the video seems to have progressed from the inert to the mechanical to the organic, from sharp angles to curves, and from abstract forms to more concrete ones.

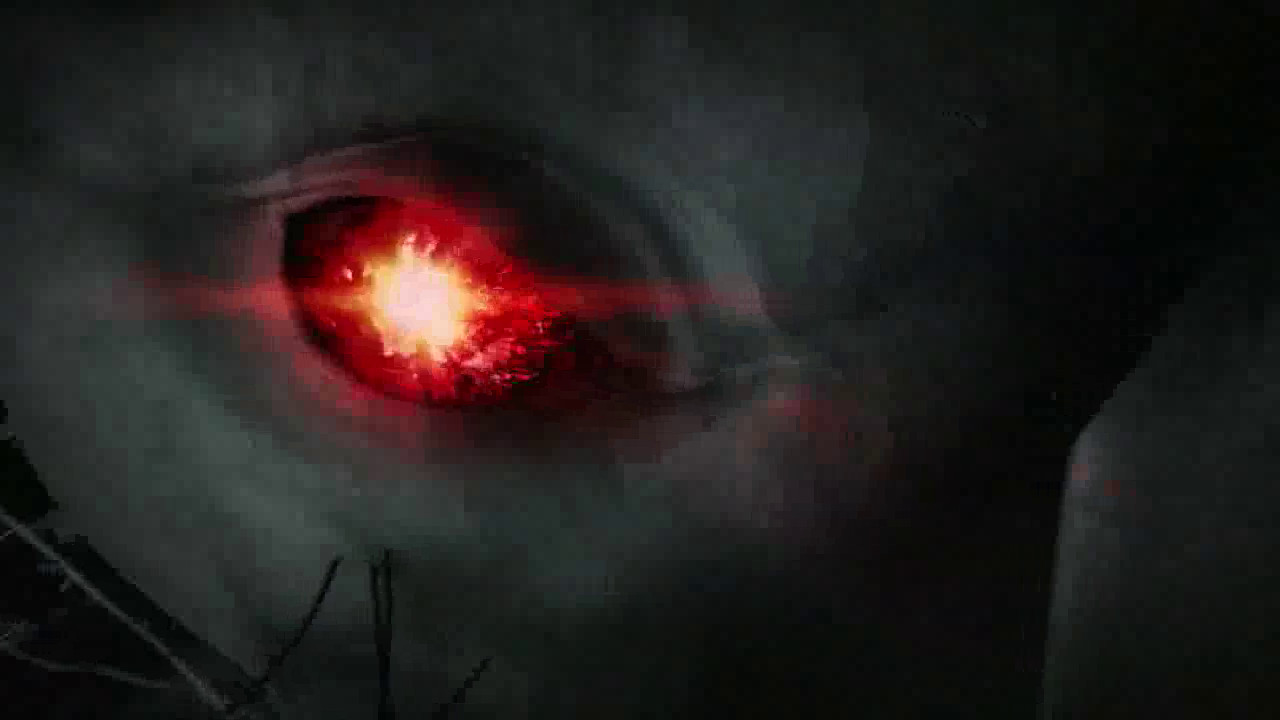

Twice in the course of the video, there is a burst of bright red light. This red is the only touch of color in “Splitting the Atom,” which is otherwise composed entirely in shades of gray. The red first appears at around the 3:56 mark, where it seems to be reflected in, and to glimmer out from, the dead monster’s eye. But the video ends with a second red flash; this time it originates in the eye or head of a distant, skeletal human figure. It glistens there, and then explodes outward to fill the screen. This pulse of red is the last image that we see, aside from the white-letters-on-black of the final credits. Strictly speaking, the explosion of red light is the only event in the course of the video, the only thing that takes time and actually happens. Its brief flashing across the screen is the only movement in the entire video that cannot be attributed to the implicit motion of the virtual camera. The flash seems like a nuclear explosion; it obliterates everything that has come before. Perhaps this is the “catastrophe” of which the director speaks, the “instant” in which “the atom bursts.” In any case, the video is restricted to the “fixed moment,” the “vitrified chaos,” of the explosion’s advent. We see the devastation, but not what leads up to it, nor what comes after. Just as the song refuses us any sense of progression, so the video suspends time in order to explore the space of imminent disaster.

7. Movies vs. 3D Modeling

The video for “Splitting the Atom,” like the song on which it is based, is five minutes and nineteen seconds long. But the time that passes within the video’s diegesis is close to zero. “Splitting the Atom” explores a landscape that has been immobilized, frozen at a single point in time. All motion is halted. People are poised in mid-action. Things have been blown up into little pieces; but the fragments hover in mid-air, never falling to the ground. Each object in the video suffers the fate of the arrow in Zeno’s paradox: arrested in mid-flight, unable to reach its goal. The catastrophe here, like the disaster evoked by Maurice Blanchot, is one that never quite arrives. But this also means that, as Blanchot puts it, “the disaster is its imminence” (1). It is always impending, always about to arrive: which is to say that it never ceases arriving. This also means that the disaster is never over. It is like a trauma: we can never have done with it, and move on. “Splitting the Atom” places us within a heightened present moment; and yet this present seems disturbingly hollow—precisely because it does not, and cannot, pass.

But there is more to the haunted, imploded temporality of “Splitting the Atom.” For Salier’s computer-generated landscape—given all at once, in a single moment of time—is hardly unique. Similar stop-time and slow-time effects have become increasingly common in recent movies, videos, and computer games. What’s noteworthy about these effects is that they are no longer produced by means of traditional cinematic techniques like slow motion and freeze frames. Instead, they rely upon computer-based three-dimensional modeling. This makes it possible to move around within, and freely explore, the space of the slowed or stilled image. The most famous and influential example of this is Bullet Time® in The Matrix (1999). The flight of a bullet shot from a gun is slowed down to such an extent that we can actually locate the bullet, like Zeno’s arrow, at a particular point in space for every moment of its trajectory. At the same time, the camera circles around Neo (Keanu Reeves) as he dodges the bullets. Within the diegesis, Bullet Time® exemplifies Neo’s superhuman powers. But for the audience, the effect is to undermine the “cinematographic illusion” of continuous movement (Deleuze, Cinema 1 1). Time is stopped, and the individual moment is isolated. The bullet or arrow is halted in mid-flight.

Bullet Time® is in fact achieved through the use of multiple cameras, deployed in a full circle around the action. Still images from these cameras are converted into individual movie frames; by choosing among these simultaneous images, the filmmakers are able, as Alexander Galloway puts it, “to freeze and rotate a scene within the stream of time,” and to view the scene, at each moment, from any desired angle (“Tripartite” 14). In this way, Bullet Time® spatializes time. It undoes Bergson’s “concrete flow of duration,” analyzing it into a series of instantaneous stills (Bergson 62). Deleuze argues that, contrary to Bergson’s own prejudices, “cinema does not give us an image to which movement is added, it immediately gives us a movement-image” (Deleuze, Cinema 1 2). That is to say, cinema is inherently Bergsonian, even though Bergson himself failed to recognize this. However, modeling techniques like Bullet Time®, in contrast to traditional cinematic techniques, actually do succeed in reducing duration to “an immobile section + abstract movement,” just as Bergson feared (Deleuze, Cinema 1 2). Reality is decomposed into a series of spatialized snapshots that are only secondarily put back into motion.
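The selection among simultaneous images can be pictured as a choice of direction through a grid of stills, indexed by camera and by moment. What follows is only a deliberately simplified sketch under that assumption: the function names, the five-camera rig, and the string labels are all hypothetical, and nothing here claims to reproduce what the actual production did beyond the description above.

```python
def conventional_shot(grid, camera):
    """Fix the camera and let time run: read one row of the grid."""
    return list(grid[camera])


def frozen_orbit(grid, moment):
    """Fix the moment and sweep across the cameras: read one column of the grid."""
    return [shots[moment] for shots in grid]


# Hypothetical rig: 5 cameras around the action, each yielding 3 stills.
# grid[c][t] is the still taken by camera c at moment t.
grid = [[f"cam{c}@t{t}" for t in range(3)] for c in range(5)]

print(conventional_shot(grid, camera=0))  # time advances, viewpoint held
print(frozen_orbit(grid, moment=1))       # viewpoint advances, time held
```

Both functions return an ordinary sequence of frames that a projector would play in order. The difference lies only in which index is held constant, which is one way of seeing how playback time can be spent traversing space rather than duration.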

Fully computer-generated three-dimensional modeling systems—like the one used to create the “Splitting the Atom” video—go even further than Bullet Time®, as they allow us to move through the rendered space at will in any direction, and to take a view from any point within it. This means, not only that spatiality is unmoored from duration, but also that the presentation of space is no longer governed by, and no longer anchored to, any particular point of view. There is no longer any implicit ideal observer, as was the case in the whole tradition that started with Renaissance perspective and the camera obscura. There is no longer any such thing as a Kantian transcendental subject, for whom space would be the “form of outer sense,” just as time would be the “form of inner sense” (Kant 80). More generally, there can be no “metaphysical subject,” defined as “a limit of the world,” external to the “visual field” that it views (Wittgenstein 57). Three-dimensional rendering, as Galloway says, is fundamentally “anti-phenomenological,” as it is not grounded in “the singular experiences of a central gazing subject or technical eye” (“Tripartite” 11).

8. A Post-Cinematic Ontology

Galloway argues that cinema and three-dimensional modeling represent opposed “ontological systems,” which radically differ in their origins, their presuppositions, and their effects (“Tripartite” 15). Cinema is primarily temporal, whereas modeling is primarily spatial: “time becomes the natural infrastructure of the cinematic image, while spatial representation and visual expression become variables. But with ‘bullet time,’ time becomes the variable, and space is withheld in synchrony” (13). The relation between the observer (or the camera) and the scene being observed is not the same in these two systems: “To create motion one must move the world and fix the camera, but to create three dimensionality one must move the camera and fix the world. . . . In the cinema, the scene turns around you, but in the computer you turn around the scene” (14). In short, these two technologies have diametrically opposed goals: “if the cinema aims to present a world, the computer aims to present a model. The former is primarily interested in movement, while the latter is primarily interested in dimension” (14). Cinema seeks to capture and preserve duration, and thence both the persistence and the mutability of appearances; computer modeling rather seeks to grasp and reproduce the underlying structural conditions that generate and delimit all possible appearance.

Although three-dimensional modeling has become increasingly common in recent Hollywood movies, its fullest development comes in other, post-cinematic media, and most notably in computer games. In The Matrix, Bullet Time® is integrated within, and ultimately works in the service of, cinematic action. As Galloway notes, even as “the time of the action is slowed or stopped . . . the time of the film continues to proceed” (Gaming 66). This means that however much the Bullet Time® sequences stand out by calling attention to themselves as “attractions,” or spectacular special effects, they ultimately return us to the onward thrust of the narrative. But this is not the case with three-dimensional modeling in computer games. For games articulate space, and privilege space over time, in ways that films do not. The duration of a movie is preset. But most computer games do not have any fixed duration. They are organized, instead, around a series of tasks to fulfill, or a quantity of space to explore. They often contain optional elements, which a player is free either to take up or to ignore. To the extent that computer games still have linear narratives, they may have multiple endings; and even if there is only a single ending, getting there depends upon the vagaries of player input. The time it takes to play a game thus varies from session to session, and from player to player.

In other words, games take place in what Galloway calls “fully rendered, actionable space,” which must already exist in advance of the player’s entry into it (63). For Galloway, “game design explicitly requires the construction of a complete space in advance that is then exhaustively explorable without montage” (64, emphasis added). Gamespace may in fact be composed of many heterogeneous elements; but these elements are fused together without gaps or cuts. As Lev Manovich puts it, the production of digital space involves

assembling together a number of elements to create a singular seamless object. . . . Where old media relied on montage, new media substitutes the aesthetics of continuity. A film cut is replaced by a digital morph or digital composite. (139; 143)

Gamespace requires the use of digital compositing in order to produce continuity; as Galloway says, “because the game designer cannot restrict the movement of the gamer, the complete play space must be rendered three-dimensionally in advance” (Gaming 64). Gamespace is therefore abstracted, modeled, and rendered, rather than—as is the usual case in cinema—constructed or revealed through the montage and juxtaposition of indexical fragments.

How does this all relate to “Splitting the Atom”? The video is more like a movie than like a game, in that it does not allow for any sort of user input or initiative; its presumptive audience is still the traditionally passive spectator-auditor of the cinema.[9] Nonetheless, “Splitting the Atom” does not engage in cinematic narration, and does not employ montage. Instead, it presents and explores an abstract, seamless virtual space that has been wholly rendered in advance. Insofar as it freezes time, the video works—in the spirit of Galloway’s post-cinematic ontology—by moving the camera and fixing the world. In “Splitting the Atom,” time is neither expressed through action (as in the films of Deleuze’s movement-image), nor presented directly as a pure duration (as in the films of Deleuze’s time-image). Rather, time is set aside as a dependent variable. To this extent, “Splitting the Atom” conforms to Galloway’s post-cinematic ontology.

However, this conclusion only applies to the images of “Splitting the Atom,” ignoring the music. Galloway, like Manovich and Rodowick, makes his argument in predominantly visual terms, and pays little attention to sound. It is crucial to Galloway’s claims that “rendered, actionable space” is entirely reversible: you can move through it at will, or have the camera “turn around the scene” in any direction. But even if this is the case for the space defined by the video images, it does not hold for the audio filling that space, or playing along with it. Chion reminds us that all sounds and noises, except for pure sine waves, are “oriented in time in a precise and irreversible manner. . . . Sounds are vectorized,” in a way that visual images need not be (19). I have noted that the song “Splitting the Atom” is largely static, without narrative or climax; but it still has a single, linear direction in time. The song does not progress on the macro-levels of melody, harmony, and rhythm; but its notes are oriented on the micro-level, moment to moment, by unidirectional patterns of attack and decay, resonance and reverberation.
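The asymmetry of attack and decay can be made concrete with a toy amplitude envelope. This is an illustrative sketch only: the envelope shape (a linear rise followed by an exponential fall), the parameter values, and the function names are assumptions chosen for simplicity, not a model of any sound in the song. A loudness curve that is held constant reads the same in both directions; one with an attack and a decay does not.

```python
import math


def envelope(n, attack, decay_rate):
    """Toy loudness curve: linear rise over `attack` samples, then exponential decay."""
    return [
        (i / attack) if i < attack else math.exp(-decay_rate * (i - attack))
        for i in range(n)
    ]


def is_time_symmetric(samples, tol=1e-9):
    """True if the sequence is unchanged (within `tol`) when played backwards."""
    return all(abs(a - b) <= tol for a, b in zip(samples, reversed(samples)))


steady = [1.0] * 100                                # sustained, unchanging loudness
struck = envelope(100, attack=5, decay_rate=0.05)   # struck or plucked note

print(is_time_symmetric(steady))  # True
print(is_time_symmetric(struck))  # False
```

The steady curve stands in here for the sustained tone that Chion treats as the exception; the struck note carries its direction in time within its own shape, whatever the larger structure of the music does.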

The ontology of audiovisual post-cinematic media is therefore more complicated than Galloway’s model allows for. Time is indeed suspended, or spatialized, in the diegetic world of “Splitting the Atom.” We are presented with a “fully rendered, actionable space” that must be presupposed as existing all at once. But despite the suspension of time within this space, it still takes time for the virtual camera to explore the space. Paradoxically, it still takes time to present to the spectator-auditor a space that is itself frozen in time. This time of exposition is a secondary and external time, defined not by the visuals, but by the “vectorized” soundtrack. Just as the song is not heard within the diegetic world of the video, so the virtual camera movement does not “take place,” or have a place, within this world. Instead of time as “inner sense,” we now have an exterior time, one entirely separate from the time-that-fails-to-pass within the video’s rendered space. It’s telling that (as I have already noted) the only actual event in the course of the video is the explosion at the end. For this violent red flashing does not take place within the video’s rendered space; rather, it obliterates that space altogether. The absence of time is the imminence of catastrophe. Time is eliminated from the world of the video—and more generally, from the world of three-dimensional modeling, which is also the world of “late capitalism,” or of the network.[10] But a certain sort of irreversible temporality returns anyway, acousmatically haunting the space from which it has been banished. We might think of this, allegorically, as time’s revenge upon space, and sound’s revenge upon the image.

9. Conclusions

“Splitting the Atom” is somewhat unusual among music videos, with its full-fledged three-dimensional modeling. But there are many other processes and special effects in current use in electronic and digital media that also work to generate new audiovisual relations.[11] For instance, consider the slitscan technique,[12] in which slices of successive frames are composited together, and put on screen simultaneously. The result is that the video’s “timeline [is] spread across a spatial plane from left to right” (blankfist).[13] A slitscan video sequence looks something like a wipe, except that, instead of transitioning from one shot to another, it transitions from earlier to later segments of the same shot. In this way, time is quite literally spatialized, smeared across the space of the screen. The image ripples and flows, and the sound is divided and multiplied into a series of fluttering echoes and anticipations, slightly out of phase with one another.[14] The result is almost synesthetic, as if the eyes were somehow hearing the images in front of them.
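The compositing of slices from successive frames can be sketched in a few lines. The version below is one simple variant, offered under stated assumptions rather than as the method used in any particular video: it produces a single still image, it maps horizontal position to frame number linearly, and the function name and toy clip are hypothetical. Each vertical slice of the output is taken from a different moment of the clip, with earlier frames on the left and later ones on the right.

```python
def slitscan(frames):
    """Composite one image from a clip: column x is copied from frame t(x).

    `frames` is a list of frames; each frame is a list of rows; each row is a
    list of pixel values. All frames are assumed to have the same dimensions.
    """
    n_frames = len(frames)
    height = len(frames[0])
    width = len(frames[0][0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Assumed linear mapping from position to time:
            # left edge -> earliest frame, right edge -> latest frame.
            t = x * n_frames // width
            row.append(frames[t][y][x])
        out.append(row)
    return out


# Toy clip: 4 frames of 2 rows by 8 columns; every pixel stores its frame index.
clip = [[[t] * 8 for _ in range(2)] for t in range(4)]

print(slitscan(clip)[0])  # [0, 0, 1, 1, 2, 2, 3, 3]
```

Because each pixel in the toy clip records the frame it came from, the printed row shows the clip's timeline laid out from left to right across the width of the image.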

More generally, new audiovisual relations are even produced by music videos that use more common techniques, like “the stroboscopic effect of the rapid editing” described by Chion (166), and the manic compositing of images in a way that “openly presents the viewer with an apparent visual clash of different spaces” described by Manovich (150). Consider, for instance, Melina Matsoukas’ video for Rihanna’s “Rude Boy” (2010), with its overt two-dimensionality, its fast cuts, and its deployment of multiple cut-out images of the singer against brightly colored backgrounds of abstract patterns and graffiti scrawls. In this music video, as in so many others, we find what Chion calls “a joyous rhetoric of images,” which “creates a sense of visual polyphony and even of simultaneity” (166). When time is spatialized, as it is here through the song’s verse-and-chorus structure and insistently repetitive beats, images themselves are no longer linear, but enter into the configurations typical of McLuhan’s acoustic space, with its “discontinuous and resonant mosaic of dynamic figure/ground relationships” (McLuhan and McLuhan 40).

Beyond the movement-image that Deleuze ascribes to classical cinema, and the time-image (or pure duration, un peu de temps à l’état pur) that he ascribes to modernist cinema, we now encounter a third image of time (if we can still call it an “image”): the extensive time, or hauntological time, of post-cinematic audiovisual media.[15] In the last several decades, we have passed what McLuhan calls a “break boundary”: a critical point “of reversal and of no return,” when one medium transforms into another (38). We have moved out of time and into space, but the consequent spatialization of time need not have such bleak consequences (homogenization, mechanization, reification) as Bergson and Deleuze both feared, and as film theorists like Rodowick still fear today. Instead, it may well be that postmodern spatialization permits a fully audiovisual medium “worthy of the name” to flourish as never before (141-56).

Works Cited

Bell, Daniel. The Cultural Contradictions of Capitalism. New York: Basic, 1996. Print.

Benjamin, Walter. Selected Writings, Volume 4: 1938-1940. Eds. Howard Eiland and Michael W. Jennings. Trans. Edmund Jephcott et al. Cambridge: Belknap-Harvard UP, 2003. Print.

Bergson, Henri. An Introduction to Metaphysics. Trans. T. E. Hulme. New York: Putnam, 1912. Print.

Blanchot, Maurice. The Writing of the Disaster. 1980. Trans. Ann Smock. Lincoln: U of Nebraska P, 1995. Print.

blankfist. “Dancing on the Timeline: Slitscan Effect.” 2010. Videosift. Web.

Breihan, Tom. “Album Review: Massive Attack, Splitting the Atom.” 2009. Pitchfork. Web.

Burroughs, William S. The Soft Machine. New York: Grove, 1966. Print.

Castells, Manuel. The Rise of the Network Society. 2nd ed. Vol. 1. The Information Age: Economy, Society, and Culture. Cambridge: Blackwell, 2000. Print.

Chion, Michel. Audio-Vision: Sound on Screen. Trans. Claudia Gorbman. New York: Columbia UP, 1994. Print.

DeLanda, Manuel. Intensive Science and Virtual Philosophy. New York: Continuum, 2002. Print.

Deleuze, Gilles. Cinema 1: The Movement-Image. Trans. Hugh Tomlinson and Barbara Habberjam. Minneapolis: U of Minnesota P, 1986. Print.

—. Cinema 2: The Time-Image. Trans. Hugh Tomlinson and Robert Galeta. Minneapolis: U of Minnesota P, 1989. Print.

Derrida, Jacques. Of Grammatology. Trans. Gayatri Chakravorty Spivak. Baltimore: Johns Hopkins UP, 1998. Print.

Doane, Mary Ann. “The Voice in the Cinema: The Articulation of Body and Space.” Yale French Studies 60 (1980): 33-50. Print.

Eisenstein, Sergei. Film Form: Essays in Film Theory. Trans. Jay Leyda. New York: Harcourt, 1949. Print.

Galloway, Alexander R. Gaming: Essays on Algorithmic Culture. Minneapolis: U of Minnesota P, 2006. Print.

—. “On a Tripartite Fork in Nineteenth-Century Media, or an Answer to the Question ‘Why Does Cinema Precede 3D Modeling?’” Wayne State University, 2010. Lecture.

Gorbman, Claudia. Unheard Melodies: Narrative Film Music. Bloomington: Indiana UP, 1987. Print.

Hägglund, Martin. Radical Atheism: Derrida and the Time of Life. Stanford: Stanford UP, 2008. Print.

James, William. The Principles of Psychology. 1890. Cambridge: Harvard UP, 1983. Print.

Jameson, Fredric. Postmodernism, Or, The Cultural Logic of Late Capitalism. Durham: Duke UP, 1991. Print.

Kant, Immanuel. Critique of Pure Reason. 1781. Trans. Werner Pluhar. Indianapolis: Hackett, 1996. Print.

Levin, Golan. “An Informal Catalogue of Slit-Scan Video Artworks and Research.” 2010. Flong. Web.

Malabou, Catherine. What Should We Do with Our Brain? Trans. Sebastian Rand. New York: Fordham UP, 2008. Print.

Manovich, Lev. The Language of New Media. Cambridge: MIT P, 2001. Print.

McLuhan, Marshall. Understanding Media: The Extensions of Man. Cambridge: MIT P, 1994. Print.

McLuhan, Marshall, and Quentin Fiore. The Medium is the Massage. New York: Bantam, 1967. Print.

McLuhan, Marshall, and Eric McLuhan. Laws of Media: The New Science. Toronto: U of Toronto P, 1988. Print.

Mulvey, Laura. Death 24x a Second: Stillness and the Moving Image. London: Reaktion, 2006. Print.

Platt, Charles. “You’ve Got Smell!” Wired. 1999. Web.

Rodowick, David. Gilles Deleuze’s Time Machine. Durham: Duke UP, 2003. Print.

—. The Virtual Life of Film. Cambridge: Harvard UP, 2007. Print.

Shaviro, Steven. Connected, Or, What It Means To Live in the Network Society. Minneapolis: U of Minnesota P, 2003. Print.

—. “Emotion Capture: Affect in Digital Film.” Projections 2.1 (2007): 37-55. Print.

—. Post-Cinematic Affect. London: Zero, 2010. Print.

van Zon, Harm. “Edouard Salier: Massive Attack ‘Splitting the Atom’.” 2010. Motionographer. Web.

Wittgenstein, Ludwig. Tractatus Logico-Philosophicus. 1921. Trans. D. F. Pears and B. F. McGuinness. New York: Routledge, 2001. Print.

Notes

[1] As David Rodowick explains, the word “heautonomous,” taken from Kant’s Third Critique, “means that image and sound are distinct and incommensurable yet complementary” (Gilles Deleuze’s Time Machine 145). Heautonomous components are not entirely autonomous from one another, but neither are they inextricably determined by one another.

[2] I discuss the question of the “post-cinematic” at greater length in Post-Cinematic Affect.

[3] Nor does this necessarily mean that we are about to enter an age of olfactory cinema and olfactory computing. We should not entirely dismiss the notion of olfactory media, however. Recall, for instance, the analog smell technology, Odorama®, in John Waters’s 1981 film Polyester. More recently, there has been at least some research into the possibilities of digital smell synthesis (Platt).

[4] Chion warns that this formulation “might be an oversimplification”; but he nonetheless maintains that it is generally valid.

[5] Some theorists argue for the existence of a “point of audition,” as the sonic analogue of a visual point of view. But Chion warns us that “the notion of a point of audition is a particularly tricky and ambiguous one . . . it is not often possible to speak of a point of audition in the sense of a precise position in space, but rather of a place of audition or even a zone of audition” (89-91). Furthermore, for us to associate a sound with a particular on-screen auditor, a “visual representation of a character in closeup” is needed in order to enforce this identification (91). For these reasons, sound does not allow for a specific point of audition, in the way that visual imagery does for a point of view.

[6] Rodowick emphasizes the “fundamental separation of inputs and outputs” in digital media, and the way that “digital acquisition quantifies the world as manipulable series of numbers” (113; 116). In partial opposition to Rodowick, I discuss the question of indexicality in digital media at greater length in my article “Emotion Capture: Affect in Digital Film.”

[7] Rodowick three times cites Babette Mangolte’s question, “Why is it difficult for the digital image to communicate duration?” (53; 163; 164).

[8] Rodowick is citing Roland Barthes here.

[9] This statement needs to be qualified slightly, because “Splitting the Atom” is not made to be viewed, and for the most part is not viewed, in a movie theater. Most people see it on a home video monitor or computer screen. However, these days we often tend to watch movies on home video monitors or computer screens as well. The important point is that “Splitting the Atom,” like most movies, is non-interactive, and does not feature any user controls beyond the basic ones built into any DVD player or computer video program.

[10] These are large generalizations, which I evidently lack the space to explain, let alone justify, here. I refer the reader to the extended discussion of these matters in my books Connected and Post-Cinematic Affect.

[11] Many of these effects, along with things like rotoscoping and three-dimensional modeling, in fact precede the development of digital technology. But digital processing makes them so much easier to accomplish that in the last twenty years or so they have moved from being difficult and infrequently used curiosities to accessible options in any digital editor’s toolbox.

[12] This technique is described in great detail in Wikipedia, “Slit-scan Photography.” See also Levin.

[13] This page also links to a video in which a scene from Singin’ in the Rain is transformed by the slitscan technique.

[14] Strictly speaking, slitscan is an image-processing technique. But if the sound is broken into small units, each of which corresponds to a “specious present” or atom of attention, and these are then coordinated with the slitscan-processed flow of images, this will lead to the auditory results that I have described.

[15] I suggest these names only as stopgaps; perhaps better ones can be found. Extensive time refers to the way that time is spatialized, only to return at the very heart of the new configurations of space. Hauntological time refers to the way that other, revoked temporalities return in the suspension of the present. Both of these names have resonances in the work of Jacques Derrida, and in particular in Martin Hägglund’s recent reading of temporality in Derrida.

Steven Shaviro is the DeRoy Professor of English at Wayne State University. He is the author of, among other works, The Cinematic Body, Post-Cinematic Affect, and “Melancholia, or, The Romantic Anti-Sublime.”

Steven Shaviro, “Splitting the Atom: Post-Cinematic Articulations of Sound and Vision,” in Denson and Leyda (eds), Post-Cinema: Theorizing 21st-Century Film (Falmer: REFRAME Books, 2016). Web. <https://reframe.sussex.ac.uk/post-cinema/3-4-shaviro/>. ISBN 978-0-9931996-2-2 (online)